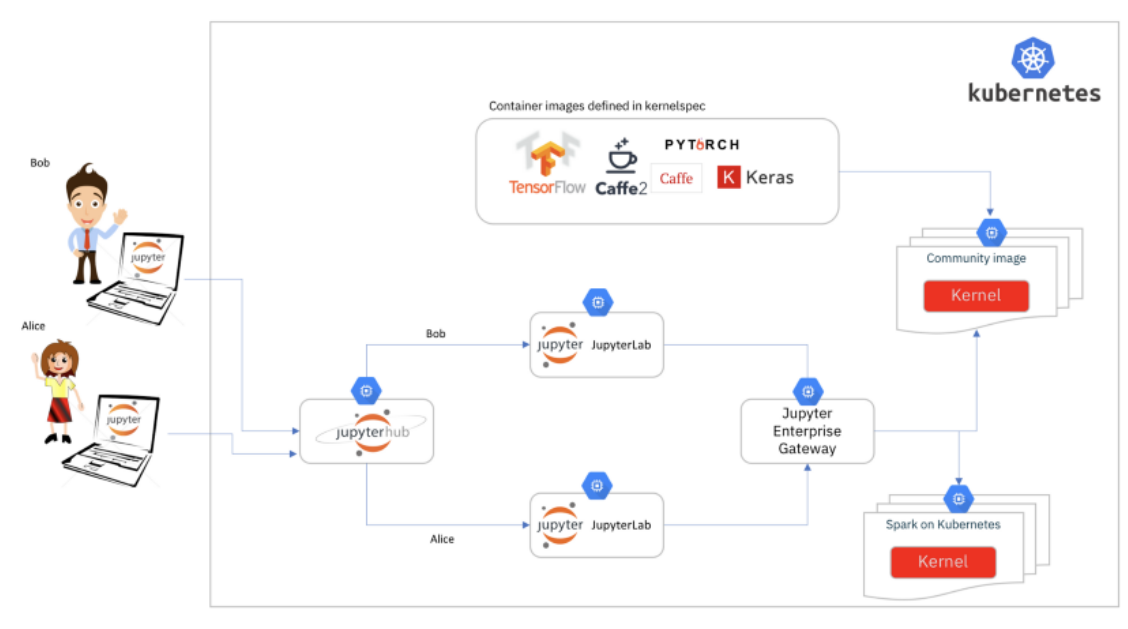

Demonstrate the steps to run Jupyter notebooks as a service using Jupyter Enterprise Gateway (JEG) and JupyterHub (JHub), separating the Jupyter server (the backend of JupyterLab) from the compute kernels.

Set up an NFS server

Assume that an NFS server (IP: 172.17.0.1) exports a share at the path /home/nfs_share.

Set up a single-node MicroK8s cluster on an Ubuntu machine

Create namespaces

kubectl create namespace enterprise-gateway

kubectl create namespace jupyterhub

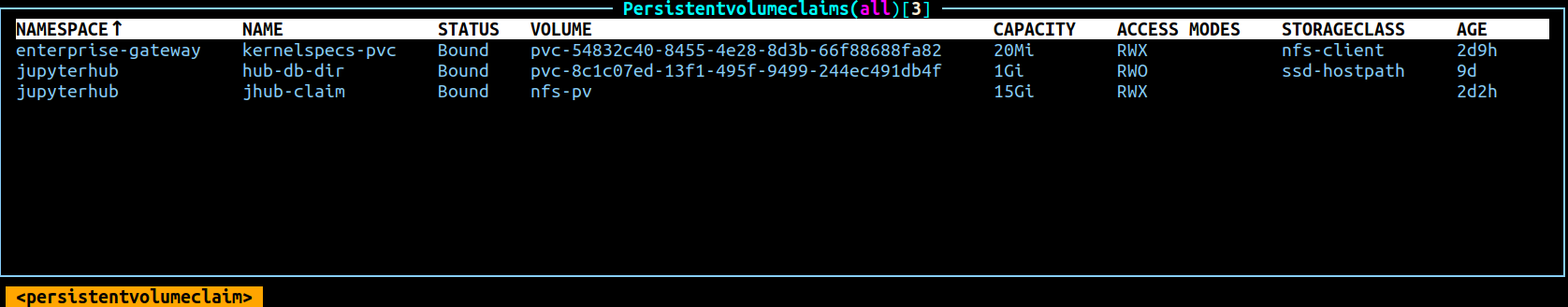

Create PV & PVCs

Use the YAML file jhub_pvc.yaml to create the PV nfs-pv and the PVC jhub-claim in namespace jupyterhub:

Contents of jhub_pvc.yaml

    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: nfs-pv
      labels:
        app: mission-control
        type: nfs-volume
    spec:
      capacity:
        storage: 15Gi
      accessModes:
        - ReadWriteMany
      nfs:
        server: 172.17.0.1
        path: "/home/nfs_share/claim"
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: jhub-claim
      namespace: jupyterhub
      labels:
        app: mission-control
        type: nfs-volume
    spec:
      storageClassName: ""
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 15Gi

Use the YAML file kernelspecs_pvc.yaml to create the PVC kernelspecs-pvc in namespace enterprise-gateway: a 20Mi NFS share allocated from the nfs-client storage class to store kernelspecs.

Contents of kernelspecs_pvc.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: kernelspecs-pvc
      namespace: enterprise-gateway
    spec:
      storageClassName: "nfs-client"
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 20Mi

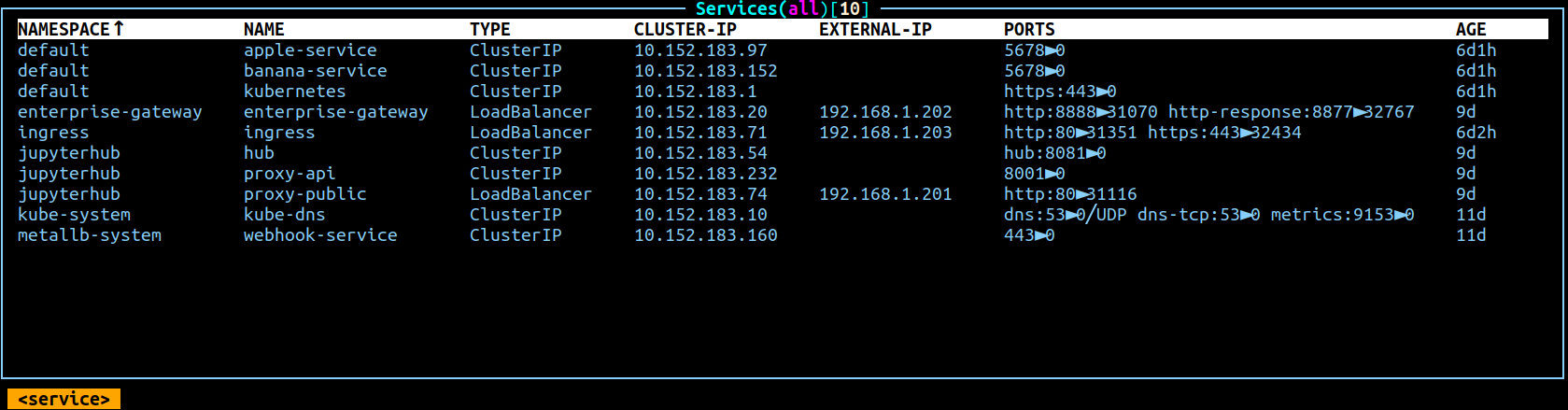

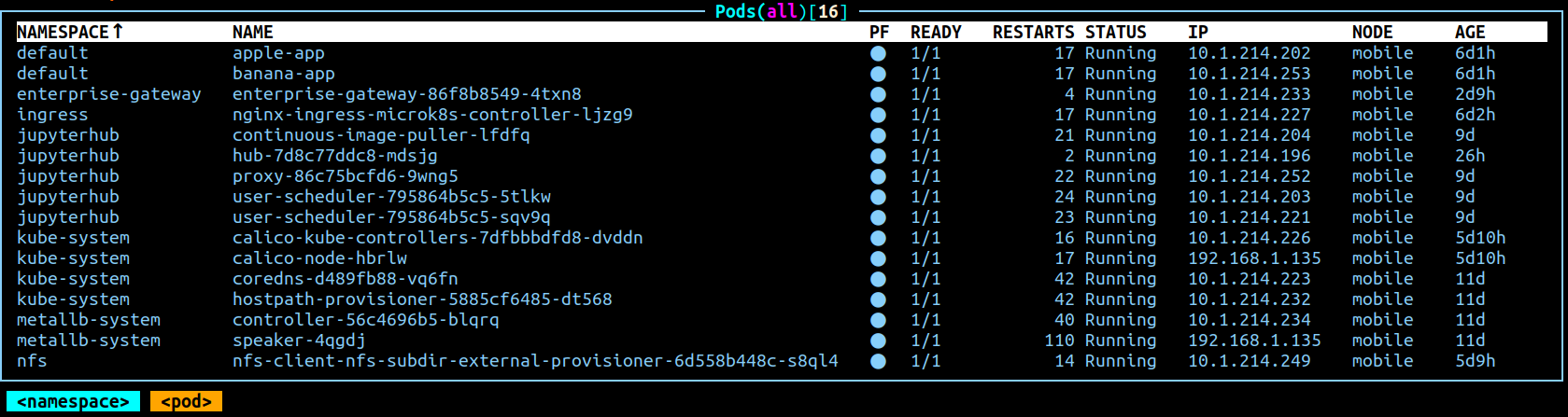

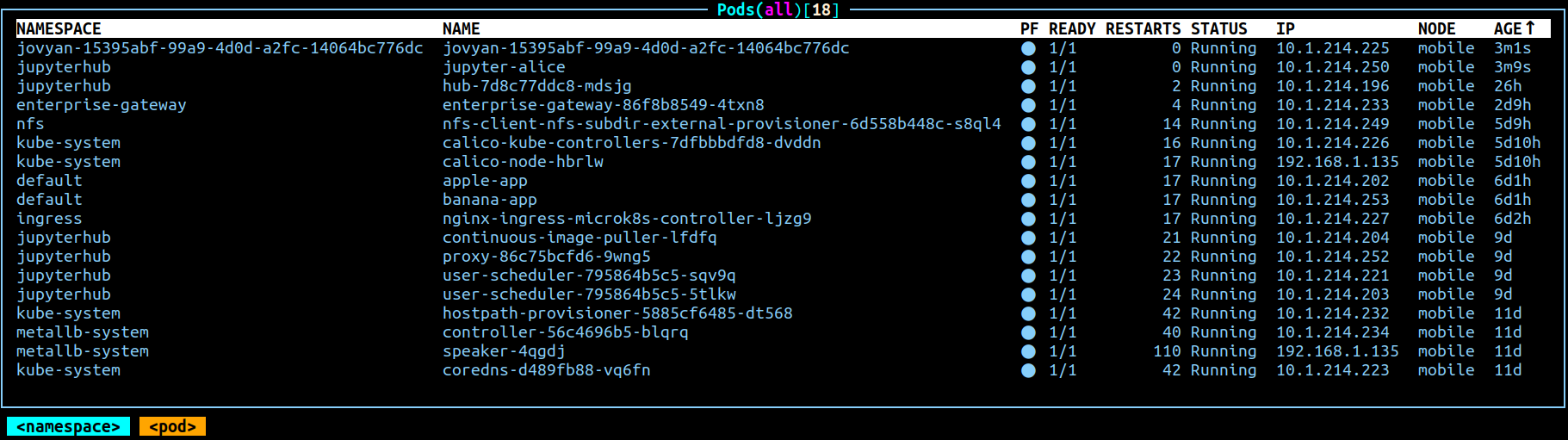

Deploy JEG to namespace enterprise-gateway

git clone https://github.com/jupyter-server/enterprise_gateway

mkdir eg

helm template --output-dir ./eg enterprise-gateway enterprise-gateway/etc/kubernetes/helm/enterprise-gateway -n enterprise-gateway -f jeg_customized_values.yaml

kubectl apply -f ./eg/enterprise-gateway/templates/

Copy the kernelspecs, kernel-launcher scripts and j2 templates to the NFS share that corresponds to the PVC kernelspecs-pvc. After the copy, the file/directory structure of the NFS share looks like:

    .
    └── python_kubernetes
        ├── kernel.json
        └── scripts
            ├── kernel-pod.yaml.j2
            └── launch_kubernetes.py
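A quick way to confirm the copy is to check the layout programmatically. The following is a minimal sketch, assuming it runs on a machine where the NFS share is reachable as a local directory; `missing_kernelspec_files` and `EXPECTED_FILES` are hypothetical names introduced only for this illustration.

```python
from pathlib import Path

# Files that the tree above shows for one kernelspec, relative to its directory.
EXPECTED_FILES = (
    "kernel.json",
    "scripts/kernel-pod.yaml.j2",
    "scripts/launch_kubernetes.py",
)


def missing_kernelspec_files(share_root, kernel_name="python_kubernetes"):
    """Return the expected files that are absent under share_root/kernel_name."""
    spec_dir = Path(share_root) / kernel_name
    return [rel for rel in EXPECTED_FILES if not (spec_dir / rel).is_file()]
```

Calling `missing_kernelspec_files("<directory backing kernelspecs-pvc>")` should return an empty list once all three files are in place.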

jeg_customized_values.yaml file contents:

    mirrorWorkingDirs: true
    service:
      type: "LoadBalancer"
      ports:
        # The primary port on which Enterprise Gateway is servicing requests.
        - name: "http"
          port: 8888
          targetPort: 8888
        # The port on which Enterprise Gateway will receive kernel connection info responses.
        - name: "http-response"
          port: 8877
          targetPort: 8877
    deployment:
      # Update CPU/Memory as needed
      resources:
        limits:
          cpu: 1
          memory: 5Gi
        requests:
          cpu: 1
          memory: 2Gi
      # Update to deploy multiple replicas of EG.
      replicas: 1
      # Give Enterprise Gateway some time to gracefully shutdown
      terminationGracePeriodSeconds: 60
    kernelspecsPvc:
      enabled: true
      name: kernelspecs-pvc
    kip:
      enabled: false

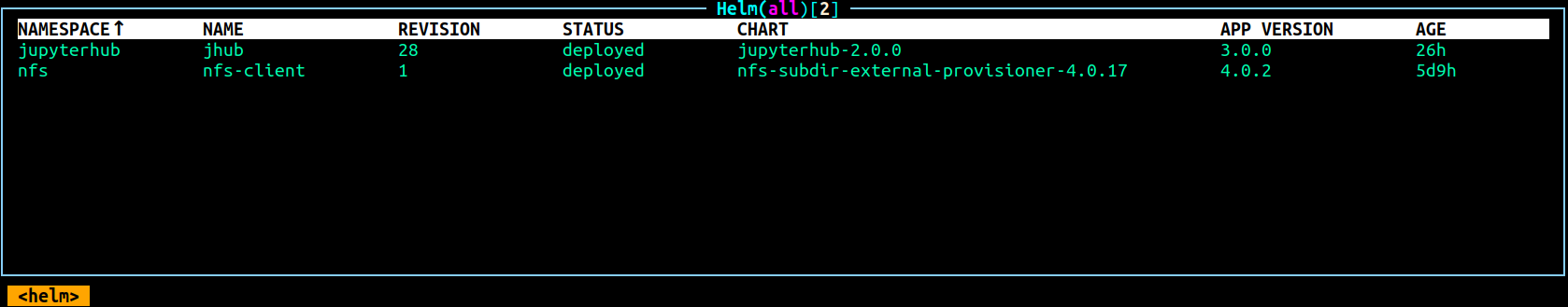
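Once the JEG pod is running, one way to check that it sees the kernelspecs on the PVC is to query its REST API. The sketch below assumes JEG answers `GET /api/kernelspecs` with a JSON object containing a `kernelspecs` mapping, as the Jupyter kernel REST API does; `list_kernelspecs` and its `opener` parameter are hypothetical names, the latter added only so the function can be exercised without a live gateway.

```python
import json
import urllib.request


def list_kernelspecs(base_url, opener=urllib.request.urlopen):
    """Return the sorted kernelspec names served by the gateway at base_url."""
    with opener(f"{base_url.rstrip('/')}/api/kernelspecs") as response:
        payload = json.load(response)
    # Each key of "kernelspecs" is a kernelspec directory name.
    return sorted(payload.get("kernelspecs", {}))
```

From inside the cluster the base URL would be `http://enterprise-gateway.enterprise-gateway:8888`; from outside, use the address assigned to the LoadBalancer service. With the setup in this article the result is expected to include `python_kubernetes`.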

Deploy JHub to namespace jupyterhub with Helm

helm repo add jupyterhub https://jupyterhub.github.io/helm-chart/

helm repo update

helm install jhub jupyterhub/jupyterhub -f jhub_customized_values.yaml --version=2.0.0 -n jupyterhub

jhub_customized_values.yaml file contents:

    hub:
      db:
        pvc:
          accessModes:
            - ReadWriteOnce
          storage: 1Gi
          storageClassName: ssd-hostpath
    singleuser:
      storage:
        type: static
        static:
          pvcName: jhub-claim
          subPath: "{username}"
        capacity: 5Gi
      networkPolicy:
        enabled: false
      image:
        name: docker.io/jupyter/base-notebook
        tag: hub-3.0.0
      cmd:
        - "sh"
        - "-c"
        - "env \"KERNEL_PATH=/home/nfs_share/claim/$JUPYTERHUB_USER\" jupyterhub-singleuser"
      defaultUrl: "/lab"
      extraEnv:
        JUPYTER_GATEWAY_URL: "http://enterprise-gateway.enterprise-gateway:8888"
        JUPYTERHUB_SINGLEUSER_APP: "jupyter_server.serverapp.ServerApp"
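The cmd entry sets KERNEL_PATH to the NFS directory that backs the user's home (the PV path plus the `{username}` subPath). The sketch below models how that value could reach JEG. It rests on two assumptions not stated in the values file: that the gateway client in the single-user server forwards only environment variables whose names start with `KERNEL_`, and that the kernel start request is a JSON body with `name` and `env` fields. `kernel_path_for` and `kernel_start_request` are hypothetical helpers, not part of any library.

```python
NFS_EXPORT_ROOT = "/home/nfs_share/claim"  # nfs.path of the nfs-pv PersistentVolume


def kernel_path_for(username, export_root=NFS_EXPORT_ROOT):
    """NFS directory backing a user's home: PV path plus the {username} subPath."""
    return f"{export_root}/{username}"


def kernel_start_request(kernel_name, environ):
    """Simplified model of the body the single-user server sends to the gateway.

    Assumption: only variables prefixed with KERNEL_ are forwarded.
    """
    forwarded = {k: v for k, v in environ.items() if k.startswith("KERNEL_")}
    return {"name": kernel_name, "env": forwarded}
```

For a user named alice, `kernel_start_request("python_kubernetes", {"JUPYTERHUB_USER": "alice", "KERNEL_PATH": kernel_path_for("alice")})` yields an `env` containing only `KERNEL_PATH=/home/nfs_share/claim/alice`.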

Explanation of jhub_customized_values.yaml customization:

Customize the kernel.json file in the kernelspecs NFS share

Customize the python_kubernetes/kernel.json file in the kernelspecs NFS share to include the environment variables KERNEL_VOLUME_MOUNTS and KERNEL_VOLUMES. The python_kubernetes/scripts/launch_kubernetes.py script reads these variables to render the kernel pod YAML file, which then includes a mount of the NFS directory specified by the environment variable KERNEL_PATH.

(When JHub launches a server for a user and that server connects to JEG, KERNEL_PATH is one of the environment variables passed to JEG. Because KERNEL_VOLUMES in python_kubernetes/kernel.json references KERNEL_PATH, the kernel pod YAML file prepared by JEG includes a mount entry for the NFS directory specified by KERNEL_PATH.)

As a result, a user’s Jupyter server pod and kernel pod share a common NFS directory mapped to their home directories (‘/home/jovyan’).
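The substitution step can be illustrated in isolation. This is a minimal sketch, assuming the `$KERNEL_PATH` reference is expanded with semantics like Python's `string.Template`; the two values are copied from the `env` stanza of python_kubernetes/kernel.json, and `expand_kernelspec_env` is a hypothetical helper, not JEG's own code.

```python
from string import Template

# Values copied from the "env" stanza of python_kubernetes/kernel.json.
KERNELSPEC_ENV = {
    "KERNEL_VOLUME_MOUNTS": "[{name: 'nfs-mount',mountPath: '/home/jovyan'}]",
    "KERNEL_VOLUMES": "[{name: 'nfs-mount',nfs: {server: '172.17.0.1', path: $KERNEL_PATH}}]",
}


def expand_kernelspec_env(spec_env, request_env):
    """Substitute $NAME references in kernelspec env values from request_env.

    safe_substitute leaves unknown $NAME references untouched instead of raising.
    """
    return {k: Template(v).safe_substitute(request_env) for k, v in spec_env.items()}
```

With `request_env = {"KERNEL_PATH": "/home/nfs_share/claim/alice"}`, the expanded KERNEL_VOLUMES entry points the `nfs-mount` volume at `/home/nfs_share/claim/alice` on 172.17.0.1, while KERNEL_VOLUME_MOUNTS stays unchanged and mounts it at /home/jovyan.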

Contents of python_kubernetes/kernel.json

    {
      "language": "python",
      "display_name": "Python on Kubernetes",
      "metadata": {
        "process_proxy": {
          "class_name": "enterprise_gateway.services.processproxies.k8s.KubernetesProcessProxy",
          "config": {
            "image_name": "docker.io/elyra/kernel-py:dev"
          }
        },
        "debugger": true
      },
      "env": {
        "KERNEL_VOLUME_MOUNTS": "[{name: 'nfs-mount',mountPath: '/home/jovyan'}]",
        "KERNEL_VOLUMES": "[{name: 'nfs-mount',nfs: {server: '172.17.0.1', path: $KERNEL_PATH}}]"
      },
      "argv": [
        "python",
        "/usr/local/share/jupyter/kernels/python_kubernetes/scripts/launch_kubernetes.py",
        "--RemoteProcessProxy.kernel-id",
        "{kernel_id}",
        "--RemoteProcessProxy.port-range",
        "{port_range}",
        "--RemoteProcessProxy.response-address",
        "{response_address}",
        "--RemoteProcessProxy.public-key",
        "{public_key}"
      ]
    }
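Because a malformed kernel.json only surfaces when a kernel fails to start, it can help to check the edited file before copying it to the share. The sketch below checks only the fields this article relies on; `check_kernelspec` and the two constant tuples are hypothetical names, and the rules are this article's conventions rather than an official schema.

```python
import json

REQUIRED_ARGV_PLACEHOLDERS = ("{kernel_id}", "{port_range}", "{response_address}", "{public_key}")
REQUIRED_ENV_KEYS = ("KERNEL_VOLUME_MOUNTS", "KERNEL_VOLUMES")


def check_kernelspec(text):
    """Return a list of human-readable problems found in a kernel.json string."""
    spec = json.loads(text)  # raises ValueError if the file is not valid JSON
    problems = []
    proxy = spec.get("metadata", {}).get("process_proxy", {})
    if not proxy.get("class_name", "").endswith("KubernetesProcessProxy"):
        problems.append("process_proxy.class_name is not KubernetesProcessProxy")
    env = spec.get("env", {})
    for key in REQUIRED_ENV_KEYS:
        if key not in env:
            problems.append(f"env.{key} is missing")
    if "$KERNEL_PATH" not in env.get("KERNEL_VOLUMES", ""):
        problems.append("env.KERNEL_VOLUMES does not reference $KERNEL_PATH")
    argv = spec.get("argv", [])
    for placeholder in REQUIRED_ARGV_PLACEHOLDERS:
        if placeholder not in argv:
            problems.append(f"argv is missing {placeholder}")
    return problems
```

Passing the contents of the file above, for example `check_kernelspec(open("python_kubernetes/kernel.json").read())`, should return an empty list.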